From Anarchy to Optimizing

Software Development

Issue: July 1993

On small software projects, program quality is largely determined by the skills of one or two programmers who build the program. On medium and large projects, quality is also strongly affected by the development process within which the programmers’ skills are employed. This article explores an approach to improving software quality and productivity by making improvements in the development process.

The SEI Process Maturity Model

The Software Engineering Institute (SEI) was founded by the Department of Defense to study software development and to disseminate information about effective development practices. Part of the SEI’s charter is to try new techniques and see how they scale up from research settings into real projects. When a technique works, the SEI describes it so that practicing programmers can put it to use.

One area the SEI has studied is development processes. It has identified five levels of software process maturity, each of which represents a powerful increase in development capability over the level below it. Here’s an informal description of the levels:

Level 1 Anarchy. Programmers do what they individually think is best and hope that all their work will come together at the end of the project. Cost, schedule, and quality are generally unpredictable and out of control. A Level 1 organization operates without formal planning or programming practices. Projects are plagued by poor change control, and tools aren’t integrated into the process. Senior management doesn’t understand programming problems or issues. The official SEI term for this level is “initial.”

Level 2 Folklore. At this level, programmers in an organization have enough experience developing certain kinds of systems that they believe they have devised an effective software development process. They’ve learned to make plans, they meet their estimates, and their corporate mythology informally embodies their accumulated wisdom. The strength of an organization at this level depends on its repeated experience with similar projects, and it tends to falter when faced with new tools and methods, new kinds of software, or major organizational changes. Organizational knowledge at this level is contained only in the minds of individual programmers, and when a programmer leaves an organization, so does the knowledge. The official SEI term for this level is “repeatable.”

Level 3 Standards. At this level, corporate mythology is written down in a set of standards. Although the process is repeatable at this stage and no longer depends on individuals for its preservation, no one has collected data to measure its effectiveness or to compare it with other processes. The fact that the process has been formalized doesn’t necessarily mean that the process works, so programmers debate the value of software processes and process measurements in general, and they argue about which processes and process measurements to use. The official SEI term for this level is “defined.”

Level 4 Measurement. At the measurement level, the standard process is measured and hard data is collected to assess the process’s effectiveness. The collection of hard data eliminates the arguments that characterize Level 3, because the hard data can be used to judge the merits of competing processes objectively. The official SEI term for Level 4 is “managed.”

Level 5 Optimization. In the lower levels of process maturity, an organization focuses on repeatability and measurements primarily in order to improve product quality. It might measure the number of defects per thousand lines of code so that it could know how good its code was. At the optimizing level, an organization focuses on repeatability and measurement so that it can improve its development process. It would measure the number of defects per thousand lines of code so that it could assess how well its process works. It has the ability to vary its process, measure the results of the variation, and establish the variation as a new standard when the variation results in a process improvement. It has tools in place that automatically collect the data it needs to analyze the process and improve it. The official SEI term for this level is “optimizing.”

Benefits of Improving Your Process Maturity

Your organization can reap substantial, tangible benefits by improving its process and moving from Level 1 to a higher level. Lockheed embarked on a program to improve its software development process several years ago, and it has now reached Level 3. Here are its results for a typical 500,000-line project:

* Projected Results

Source: “A Strategy for Software Process Improvement” (Pietrasanta 1991a).

In the five years needed to move from Level 1 to Level 3, Lockheed improved its productivity by a factor of 5 and reduced its defect rate by a factor of almost 10. At Level 5, it will have improved its productivity by a factor of 12 and reduced its defect rate by a factor of almost 100. Lockheed’s figures for Levels 4 and 5 are projections, but they are similar to actual figures reported for an IBM group that is responsible for in-flight space-shuttle code and that has been assessed at Level 5 (Pietrasanta 1991b). Results similar to Lockheed’s have been reported by Motorola, Xerox, and other companies (Myers 1992). Hughes Aircraft reports that a one-time, $400,000 investment in process improvement for a 500-person staff is now paying off at the rate of $2 million per year (Humphrey, Snyder, and Willis 1991).
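To put the Hughes Aircraft figures in perspective, the arithmetic can be worked through in a few lines of Python. This is only a sketch: the dollar amounts and staff size come from the paragraph above, while the payback calculation assumes the reported return accrues evenly through the year, which the article does not state.

```python
# Figures quoted in the article for Hughes Aircraft.
INVESTMENT_DOLLARS = 400_000       # one-time investment
STAFF_SIZE = 500                   # people covered by the improvement effort
ANNUAL_RETURN_DOLLARS = 2_000_000  # reported payoff per year


def payback_months(investment, annual_return):
    """Months of returns needed to cover a one-time investment.

    Assumption (not from the article): returns accrue evenly over the year.
    """
    return 12 * investment / annual_return


cost_per_person = INVESTMENT_DOLLARS / STAFF_SIZE
months = payback_months(INVESTMENT_DOLLARS, ANNUAL_RETURN_DOLLARS)
return_per_dollar = ANNUAL_RETURN_DOLLARS / INVESTMENT_DOLLARS

print(f"Cost per staff member: ${cost_per_person:,.0f}")
print(f"Payback period: {months:.1f} months")
print(f"Annual return per dollar invested: ${return_per_dollar:.0f}")
```

Under that assumption, the investment works out to $800 per staff member and is recovered in under three months.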

In addition to the quality and productivity improvements, some software suppliers have improved their processes in order to demonstrate their commitment to quality. Government and private buyers are starting to ask for a supplier’s SEI maturity rating as part of their product purchasing processes. Because levels of maturity correlate with levels of product quality and because the quality of packaged software varies greatly, companies may soon refuse to buy products from suppliers that haven’t achieved high levels of maturity.

Survey of Organizations’ Existing Process Maturity

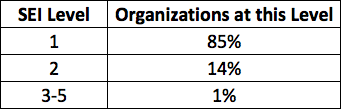

Considering the sizable benefits from doing so, you’d expect most organizations to strive for Level 5. In the keynote address at the Pacific Northwest Software Quality Conference at the end of 1991, Alfred Pietrasanta reported the following figures for the number of organizations at each level of maturity:

Source: “Implementing Software Engineering in IBM” (Pietrasanta 1991b).

As of late 1991, only about one percent of all organizations were performing at better than Level 2. One reason is that quality and productivity processes are less technical matters than they are organizational ones. To achieve major benefits, the entire organization must understand the importance of the development process and be dedicated to improving it.

If you want to improve your own organization’s process maturity, you should consider three factors that distinguish lower-level organizations from those at higher levels. The next three sections sketch these factors: repeatability (methodology), measurability (metrics), and feedback (optimization).

Methodology

Formally standardizing the development process is the key to improving from Level 2 to Level 3. You can standardize in a couple of different ways. Most organizations codify the process that’s already being used by their best programmers. Other organizations try to impose a cookie-cutter methodology such as “structured methods” or “object-oriented methods,” but anecdotal reports suggest that imposing a completely new process is less effective than starting with an existing one.

Programmers who aren’t familiar with the benefits of using a formal methodology sometimes resist it. A methodology limits the full range of a programmer’s creativity and often adds overhead to the development process. If the only thing a programmer knows is that a methodology is simultaneously limiting and inefficient, no one should be surprised that the programmer complains about it. But within a larger context the rigidity and overhead of a defined process have value. Programmers should be made to understand that value so that they can consider its benefit before they complain about its restrictions.

Making the process repeatable also lays the groundwork for the measurement that’s needed at the higher levels. When you measure processes with wide variations, you’re never sure which factors are responsible for differences in quality and productivity. When a process is made repeatable by the use of a standard methodology, you can be reasonably sure that measured differences arise from the factor you varied, not from other factors that aren’t under your control.

Metrics

Formally measuring your process is the key to moving from Level 3 to Level 4. The term “metrics” refers to any measurement related to software development. Lines of code, number of defects, defects per thousand lines of code, number of global variables, and hours to complete a project are all metrics, as are all other measurable aspects of the software process.

Here are three solid reasons to measure your process:

1. You can measure any part of the software process in a way that’s superior to not measuring it at all. The measurement may not be perfectly precise; it may be difficult to make; it may need to be refined over time; but any measurement gives you a handle on your process that you don’t have without it.

For data to be used in a scientific experiment, it must be quantified. Can you imagine an FDA scientist recommending a ban on a new food product because a group of white rats “just seemed sicker after they ate it”? No, you’d insist on a reason like “Rats that ate the new food were sick 3.7 times as often as rats that didn’t.” To evaluate software-development methods, you must also measure them. Statements like “This new method seems more productive” aren’t good enough.

2. What gets measured, gets done. When you measure an aspect of your development process, you’re implicitly telling people that they should work to improve that aspect, and people will respond to the objectives you set for them.

3. To argue against metrics is to argue that it’s better not to know what’s really happening on your project. When you measure an aspect of a project, you know something about it that you didn’t know before. You can see whether the aspect you measure gets bigger or smaller or stays the same. The measurement gives you a window into that aspect of your project. The window may be small and cloudy until you can refine your measurements, but it’s better than no window at all.

You can measure virtually any aspect of the software-development process. Here are some metrics that other practitioners have found useful:

Useful Metrics

Size

- Total lines of code written

- Total comment lines

- Total declarations

- Total blank lines

Productivity

- Number of work hours spent on the project

- Number of work hours spent on each routine

- Number of times each routine is changed

Defect Tracking

- Severity of each defect

- Location of each defect

- Way in which each defect is corrected

- Person responsible for each defect

- Number of lines affected by the defect correction

- Number of work hours spent correcting each defect

- Amount of time required to find a defect

- Amount of time required to fix a defect

- Number of attempts made to correct each defect

- Number of new errors resulting from each defect correction

Maintainability

- Number of parameters passed to each routine

- Number of routines called by each routine

- Number of routines that call each routine

- Number of decision points in each routine

- Control flow complexity of each routine

- Lines of code in each routine

- Lines of comments in each routine

- Number of data-declarations in each routine

- Number of gotos in each routine

- Number of input/output statements in each routine

Overall Quality

- Total number of defects

- Number of defects in each routine

- Average defects per thousand lines of code

- Mean time between failures

- Number of compiler-detected errors

You can collect most of these measurements with software tools that are currently available. At this time, most of the metrics aren’t useful for making fine distinctions among programs, modules, or routines (Shepperd and Ince 1989). They’re useful mainly for identifying routines that are “outliers”: abnormal metrics in a routine are a warning sign that you should reexamine the routine, checking for unusually low quality.
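The outlier idea can be sketched in a few lines of Python. The routine names and decision-point counts below are invented for illustration, and the outlier test (distance from the median, scaled by the median absolute deviation) is one reasonable choice rather than a method prescribed by the article or by Shepperd and Ince. A small helper for defects per thousand lines of code is included because that metric appears in the list above.

```python
from statistics import median


def defects_per_kloc(defects, lines_of_code):
    """Defects per thousand lines of code."""
    return 1000 * defects / lines_of_code


def find_outliers(metric_by_routine, threshold=3.0):
    """Return routines whose metric lies far from the median.

    Distance is scaled by the median absolute deviation (MAD).
    The threshold of 3.0 is an assumption, not a standard.
    """
    values = list(metric_by_routine.values())
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []  # values are too uniform to single out any routine
    return [
        name
        for name, value in metric_by_routine.items()
        if abs(value - center) / mad > threshold
    ]


# Hypothetical data: number of decision points in each routine.
decision_points = {
    "ParseInput": 4,
    "FormatReport": 6,
    "ReadConfig": 5,
    "UpdateLedger": 31,
    "PrintTotals": 3,
    "OpenFile": 5,
}

suspects = find_outliers(decision_points)
print("Routines to reexamine:", suspects)
```

A flagged routine isn’t necessarily defective; the flag is only a prompt to look at it more closely.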

Don’t start by collecting data on all possible metrics. You’ll bury yourself in data so complex and unreliable that you won’t be able to figure out what any of it means. Start with a simple set of metrics such as number of defects, work-months, dollars, and lines of code. Standardize the measurements across your projects, and then refine them and add to them as you gain more insight into what you should measure.

Make sure that the reason you’re collecting data is well-defined. Measuring an aspect can be dangerous if that single measurement is not part of a carefully constructed set of measurements. If you measure only lines of code, you might suddenly find programmers creating programs with lots of defects. The extra effort that goes into the parts of the process that you measure comes out of the parts you don’t. A good approach is to set goals, determine the questions you need to ask to meet the goals, and then choose your measurements based on what you need to know to answer the questions. A review of data collection at NASA’s Software Engineering Laboratory concluded that the most important lesson learned in 15 years was that you need to define measurement goals before you measure (Valett and McGarry 1989).
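One way to keep measurements tied to their purpose is to record the chain from goal to question to measurement explicitly. The sketch below does that in Python; the class names, the example goal, and the example questions are all hypothetical, and the measurement names are borrowed from the list of useful metrics earlier in this article.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Question:
    text: str
    measurements: List[str] = field(default_factory=list)


@dataclass
class Goal:
    text: str
    questions: List[Question] = field(default_factory=list)


def measurements_to_collect(goals):
    """List each measurement some question needs, once, in first-seen order."""
    needed = []
    for goal in goals:
        for question in goal.questions:
            for measurement in question.measurements:
                if measurement not in needed:
                    needed.append(measurement)
    return needed


# Hypothetical goal and questions, for illustration only.
goals = [
    Goal(
        "Reduce the number of defects delivered to customers",
        [
            Question(
                "How defect-prone is our code?",
                ["number of defects", "lines of code"],
            ),
            Question(
                "How much effort does correction cost?",
                ["number of defects", "work hours spent correcting each defect"],
            ),
        ],
    )
]

plan = measurements_to_collect(goals)
print(plan)
```

Anything that doesn’t appear in the resulting list has no stated reason to be collected yet.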

Optimization

As noted earlier, the salient characteristics of the SEI organizational maturity model at its highest level are repeatability, measurability, and controlled variations and feedback. In a sense, this is an example of the scientific method. Before you can trust the results of an experiment, it must be repeatable. You must measure the results to know what the results are. When the experiment is measured and repeatable, then you can vary specific parameters and observe the effects. Because the process is repeatable, you know that any differences you measure come from the variation that you introduced. Software processes work the same way.

Suppose that you want to assess the effect of using inspections rather than walkthroughs. You’ve used walkthroughs consistently and collected data on the error-detection rate and the effort required for each error found. If you’re using a measured, repeatable process, when you substitute inspections for walkthroughs you can be reasonably certain that any differences measured are attributable to the use of inspections rather than walkthroughs. If you measure a 20 percent improvement in error detection and a 50 percent reduction in effort per error found, you can feed that result back into the process and change from walkthroughs to inspections. This is the way in which the Level 5 organization is optimizing: it constantly uses the data it collects to improve its process.
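The comparison in this example can be sketched as a small Python calculation. The raw counts below are invented so that they reproduce the 20 percent and 50 percent figures in the text, and the decision rule (adopt the variation only if detection improves and effort per error falls) is an assumption about how an organization might act on the data.

```python
from dataclasses import dataclass


@dataclass
class ReviewData:
    errors_found: int     # errors caught by the review technique
    errors_present: int   # errors caught by review plus those found later
    effort_hours: float   # total hours spent on reviews

    @property
    def detection_rate(self):
        return self.errors_found / self.errors_present

    @property
    def hours_per_error(self):
        return self.effort_hours / self.errors_found


def relative_change(old, new):
    """Fractional change from old to new; positive means an increase."""
    return (new - old) / old


# Hypothetical measurements from otherwise identical, repeatable processes.
walkthroughs = ReviewData(errors_found=50, errors_present=100, effort_hours=200.0)
inspections = ReviewData(errors_found=60, errors_present=100, effort_hours=120.0)

detection_change = relative_change(
    walkthroughs.detection_rate, inspections.detection_rate
)
effort_change = relative_change(
    walkthroughs.hours_per_error, inspections.hours_per_error
)

# Assumed decision rule: both measures must move in the right direction.
adopt_inspections = detection_change > 0 and effort_change < 0

print(f"Change in error-detection rate: {detection_change:+.0%}")
print(f"Change in effort per error found: {effort_change:+.0%}")
print("Make inspections the new standard:", adopt_inspections)
```

The comparison is meaningful only because everything except the review technique was held constant between the two sets of measurements.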

Summary

On all but the smallest software projects, the development process you use substantially determines the quality of your programs. The SEI’s process maturity model gives you a well-defined approach to improving your software process. The SEI model has produced dramatic improvements in quality and productivity for the companies that have tried it, and it seems certain to become important for more companies in the future.

Further Reading

Humphrey, Watts S. Managing the Software Process. Reading, Massachusetts: Addison-Wesley, 1989. Humphrey is responsible for the SEI’s five levels of organizational maturity. This book is organized according to process maturity levels and details the steps necessary to move from each level to the next. He gives much more rigorous and complete definitions of the process maturity levels than I have provided here.

Paulk, Mark C., et al. “Capability Maturity Model for Software,” SEI-91-TR-24. Available from Research Access, Inc., 3400 Forbes Avenue–Suite 302, Pittsburgh, PA 15213, 1-800-685-6510. This is the official SEI white paper that lays out the process maturity model described in this article.

Humphrey, Watts S., Terry R. Snyder, and Ronald R. Willis, 1991. “Software Process Improvement at Hughes Aircraft,” IEEE Software, vol. 8, no. 4 (July 1991), pp. 11-23. This fascinating case study of software process improvement includes a candid discussion of problems Hughes encountered in trying to improve its process and a useful list of 11 lessons learned in its improvement effort.

Grady, Robert B. and Deborah L. Caswell. Software Metrics: Establishing a Company-Wide Program. Englewood Cliffs, NJ: Prentice Hall, 1987. Grady and Caswell describe their experience in establishing a software-metrics program at Hewlett-Packard and tell how you can establish one in your organization.

Jones, Capers. Applied Software Measurement. New York: McGraw-Hill, 1991. Jones is a leader in software metrics. His book provides the definitive theory and practice of current measurement techniques and describes problems with traditional metrics. It lays out a full program for collecting “function-point metrics,” the metric that will probably replace lines of code as the standard measure of program size. Jones has collected and analyzed a huge amount of quality and productivity data, and this book distills the results in one place.

Other References

Myers, Ware, 1992. “Good Software Practices Pay Off–Or Do They?” IEEE Software, March 1992, pp. 96-97.

Pietrasanta, Alfred M., 1991a. “A Strategy for Software Process Improvement,” Ninth Annual Pacific Northwest Software Quality Conference, October 7-8, 1991, Oregon Convention Center, Portland, OR.

Pietrasanta, Alfred M., 1991b. “Implementing Software Engineering in IBM” (keynote address), Ninth Annual Pacific Northwest Software Quality Conference, October 7-8, 1991, Oregon Convention Center, Portland, OR.

Shepperd, M. and D. Ince, 1989. “Metrics, Outlier Analysis and the Software Design Process,” Information and Software Technology, vol. 31, no. 2 (March 1989), pp. 91-98.

Valett, J. and F. E. McGarry, 1989. “A Summary of Software Measurement Experiences in the Software Engineering Laboratory,” Journal of Systems and Software, 9 (2), 137-148.

Author: Steve McConnell, Construx Software