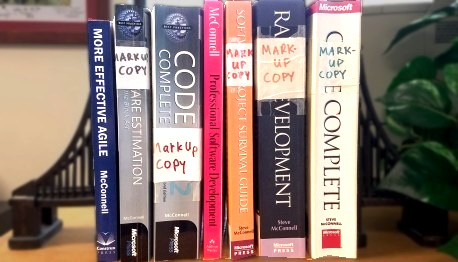

My Books

Here are summaries of the books I’ve written:

More Effective Agile: A Roadmap for Software Leaders. This distills my company’s experience working with hundreds of companies into an easy-to-read guide to the modern Agile practices that work best.

Code Complete 2. (Now also an online course) A practical handbook of software-construction practices. Updated for web development, object-oriented development, agile practices, and other modern construction issues.

Software Estimation: Demystifying the Black Art. A comprehensive set of tips and heuristics that software developers, technical leads, and project managers can apply to create more accurate estimates.

Professional Software Development. Essays about the software engineering profession.

Rapid Development. Strategy and best practices for optimizing software development schedules. This book might still have some historical interest, but it is pretty out of date at this point.

Software Project Survival Guide. A step-by-step guide to running a successful software project. This was a good book in its day, but today I would recommend an introductory Scrum book instead.

Code Complete, 1st Edition. A practical handbook of software-construction practices. Superseded by Code Complete 2. This is still available as a new book on Amazon (inexplicably, since it’s been out of print for 15 years), but the second edition is strongly preferred at this point.

After the Gold Rush (out of print). Essays about creating a true profession of software engineering. This book has been superseded by Professional Software Development.

If you like my books, you might also be interested in my magazine articles.

Check out the classes related to my books!